Enhancing SEO & Crawlability for Static Sites with Edge-Side Rendering

Built a custom Cloudflare Worker that serves pre-rendered content to search engine bots, improving SEO and indexability for a static portfolio site whose dynamic content is rendered client-side, without a costly stack migration. I developed and debugged the solution independently, using AI assistance and self-taught troubleshooting techniques, despite not having a Computer Science background.

Situation

As a marketing and brand management student, my goal was to build a portfolio that was not just a digital resume but a demonstration of my skills across languages, cultures, and technologies. I launched shainwaiyan.com, a fast, multilingual, mobile-optimized static site powered by a headless CMS (Strapi), the GitHub API, and dynamic client-side rendering (CSR). This approach kept the site lightweight for users and ran entirely on free-tier deployments. The site worked well for human visitors.

Task

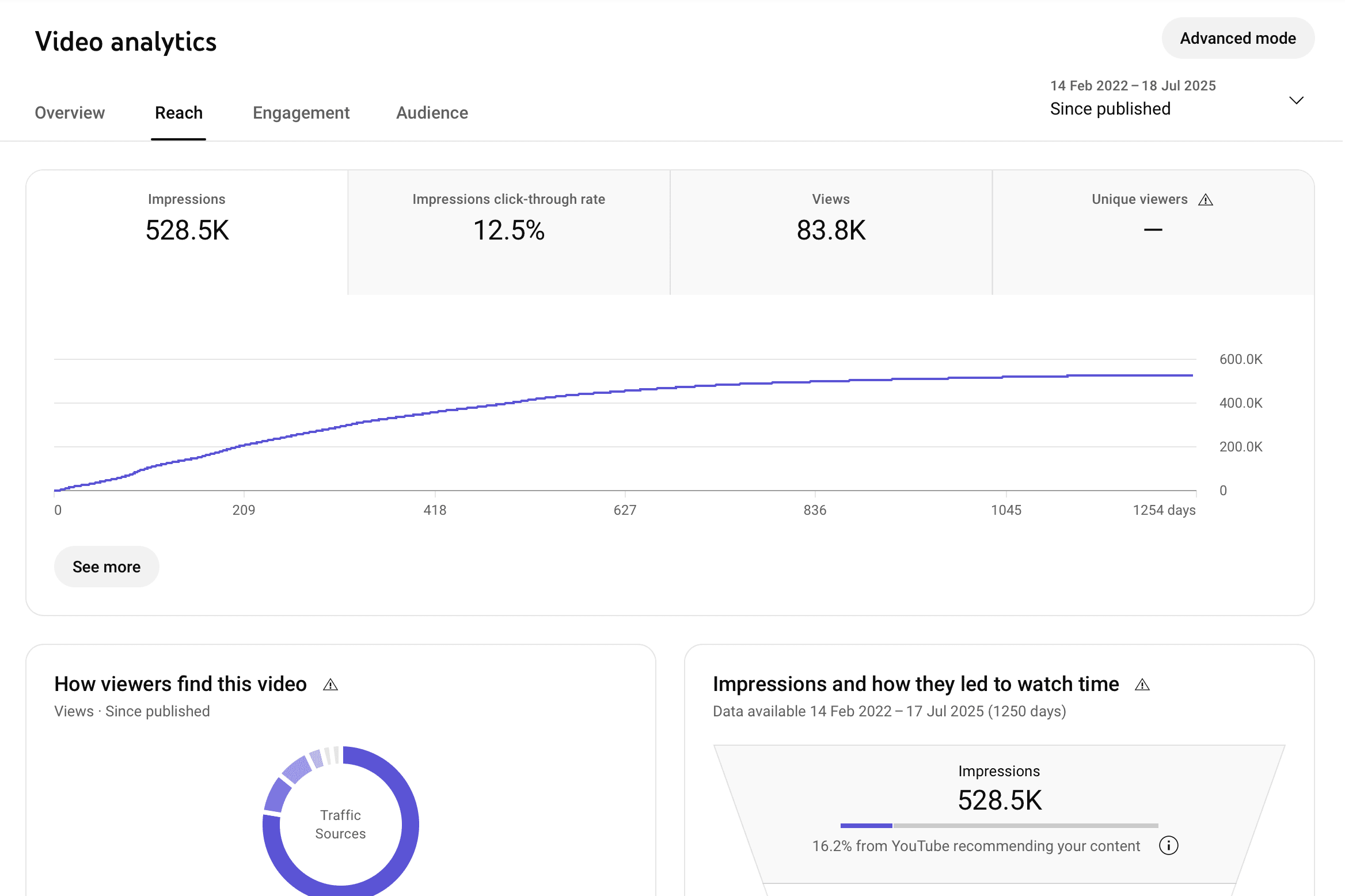

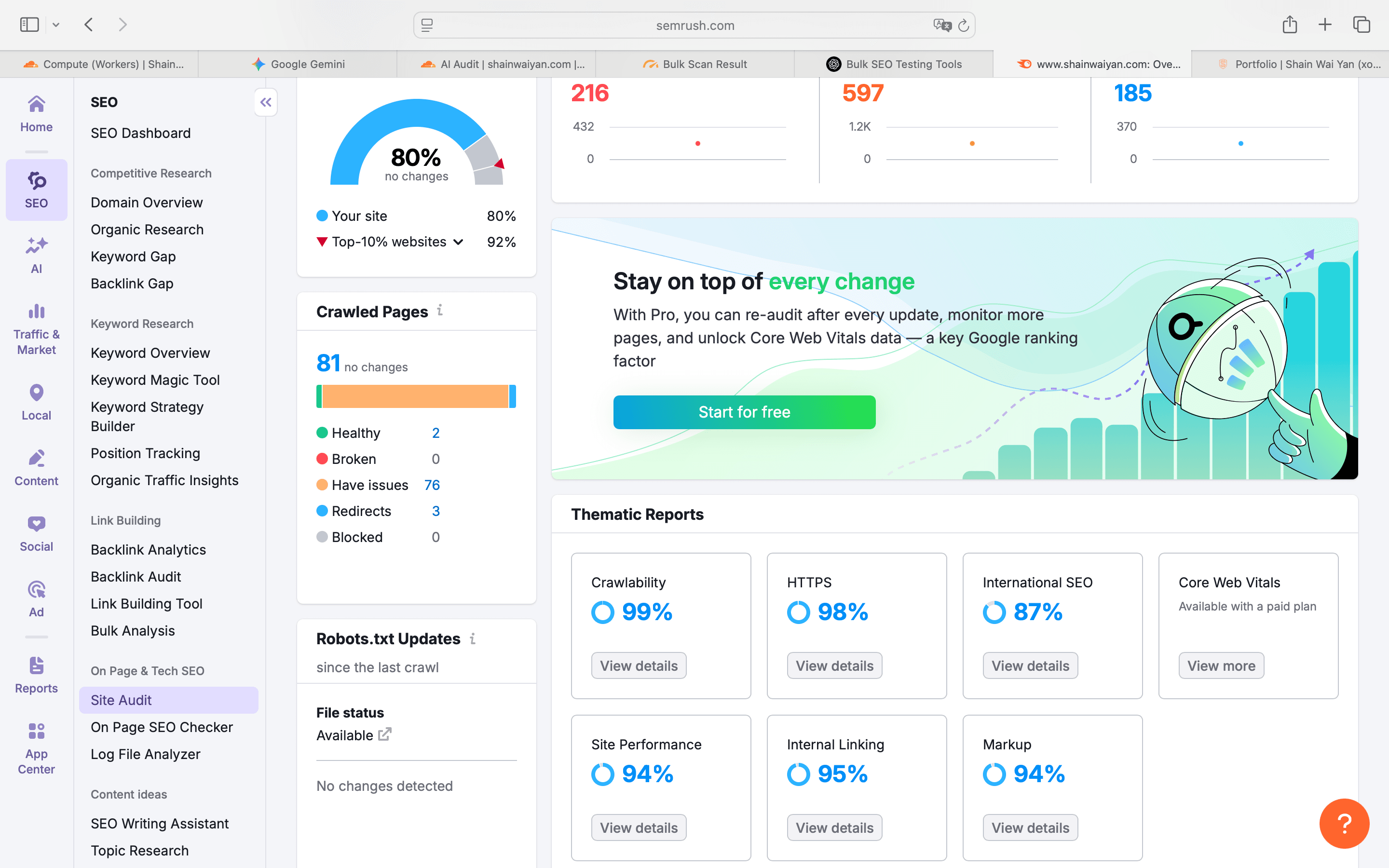

The critical challenge emerged during SEO analysis: search engine bots like Googlebot and Bingbot were snapshotting the page before the dynamic content loaded. As a result, although the site appeared fine to SEO tools, essential content such as business plans, marketing projects, marketing in motion, blogs, and certifications was not being indexed. Despite clean structure and keyword optimization, the site was effectively invisible where it mattered most for search rankings. The conventional advice was to rebuild the entire site with a Server-Side Rendering (SSR) framework like Next.js or Nuxt. That was a significant undertaking I wanted to avoid, because I wanted to keep my static-first stack, free-tier deployment, and existing Cloudflare-based workflow.

Action

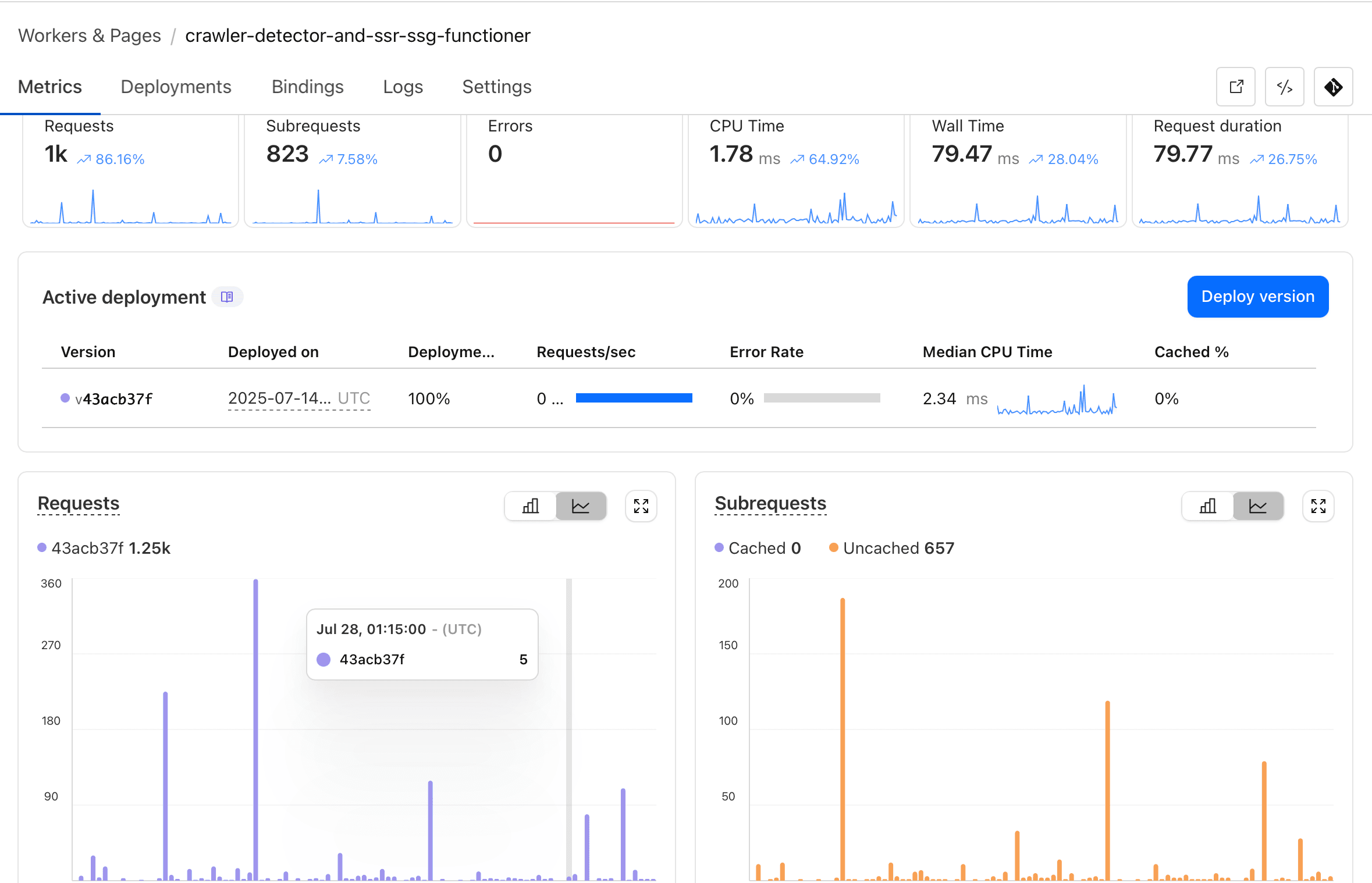

Instead of rebuilding, I devised and implemented an edge-layer workaround: an Enhanced Bot Rendering Cloudflare Worker. As a non-Computer Science graduate, I typically don't write code, so I developed the worker with AI assistance, using it to bridge my strategic vision and the technical execution. My role was to verify that it worked and to troubleshoot any issues. That often meant debugging on my own: using curl commands to inspect HTTP requests and responses, analyzing network traffic, and reading through logs to pinpoint errors. This hands-on, self-taught troubleshooting was what finally exposed a hidden conflict with my existing SSR worker, which had caused two days of frustration.

The worker was designed to act as a gatekeeper for all incoming traffic:

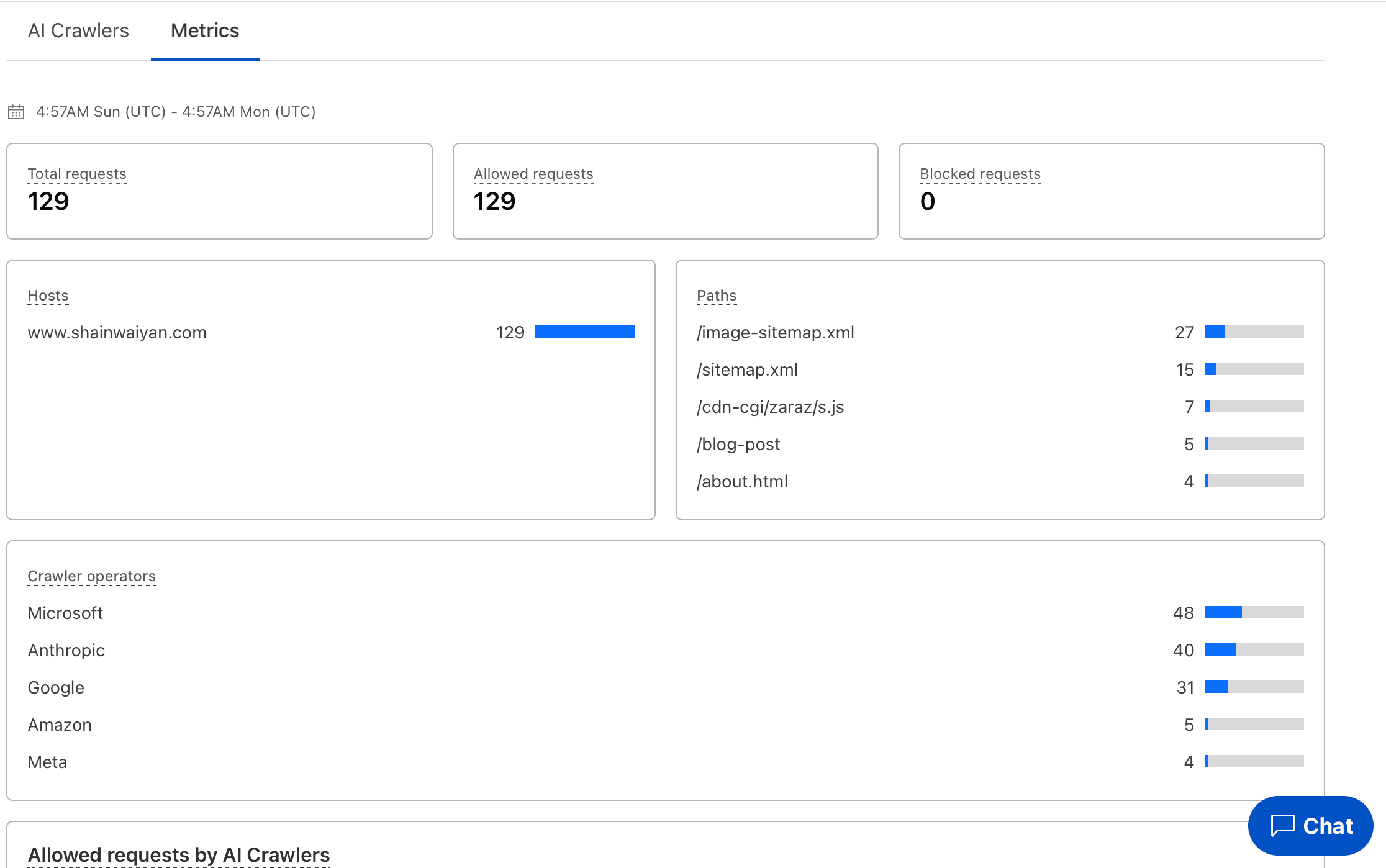

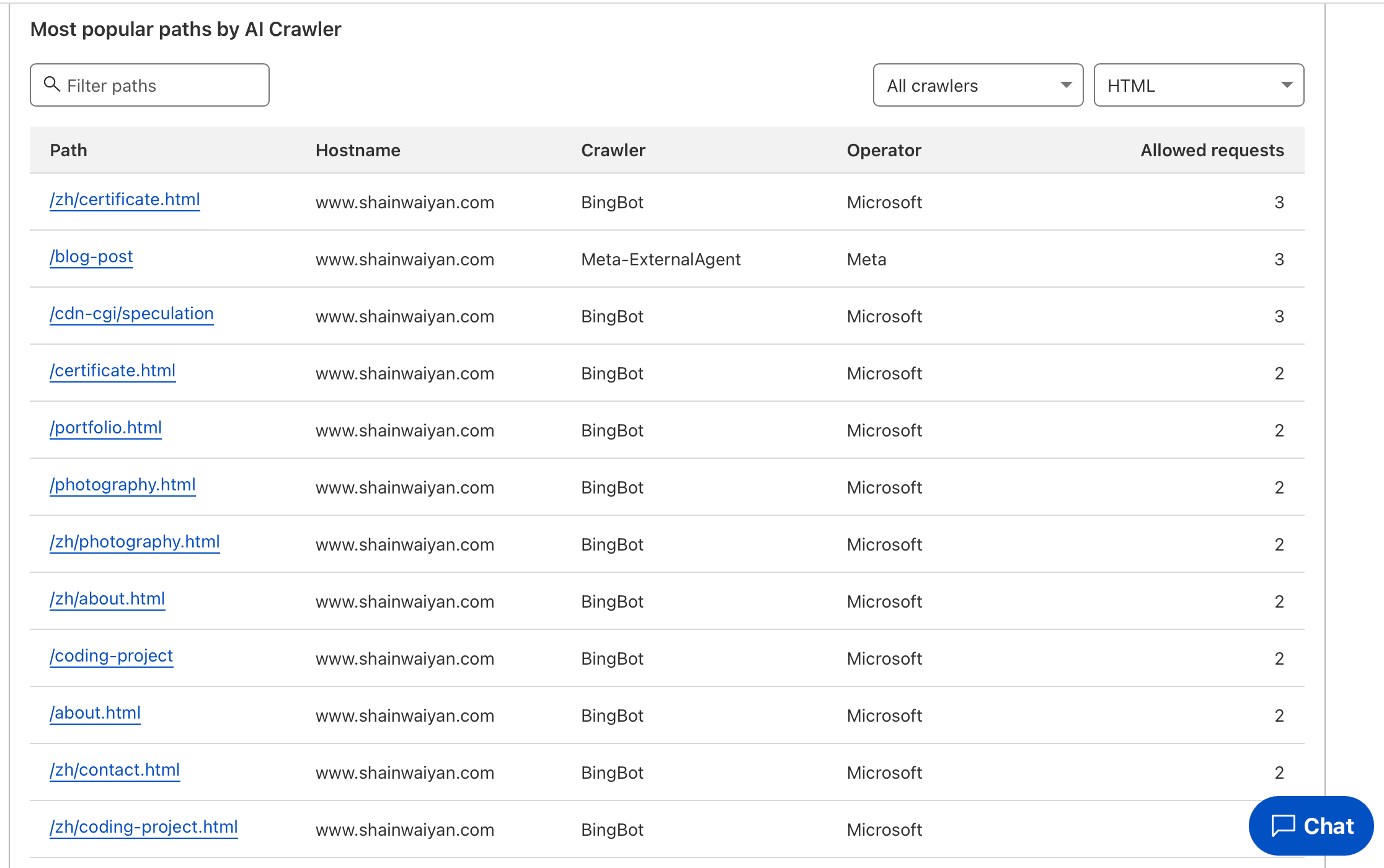

Bot Detection: The worker checks each request's User-Agent header against a list of patterns that identify crawlers, including search engine, social media, and AI bots.
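
The user-agent check described above could be sketched roughly as follows. This is a minimal illustration, not the worker's actual code: the pattern list and the names `BOT_PATTERNS` and `isBot` are assumptions, and a production list would likely be longer.

```typescript
// Hypothetical crawler patterns (assumption): search engines, social media, AI bots.
// Case-insensitive, and without the `g` flag so `test` keeps no state between calls.
const BOT_PATTERNS: RegExp[] = [
  /googlebot/i,
  /bingbot/i,
  /duckduckbot/i,
  /facebookexternalhit/i,
  /twitterbot/i,
  /linkedinbot/i,
  /gptbot/i,
];

// Returns true when the User-Agent header matches any known crawler pattern.
// A missing header is treated as a normal visitor.
function isBot(userAgent: string | null): boolean {
  if (!userAgent) return false;
  return BOT_PATTERNS.some((pattern) => pattern.test(userAgent));
}
```

In a Worker, the header value would typically come from `request.headers.get("user-agent")`, which returns `null` when the header is absent.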

Conditional Rendering Logic:

For Bots: If a recognized crawler is detected and the request targets a pre-renderable path, the worker renders the page itself. It fetches the requested HTML template, retrieves the required dynamic API data from Strapi and GitHub, and injects that data into the HTML at the elements matched by specified CSS selectors. It also applies SEO enhancements (structured data, meta tags, image optimization) and security features (CSP, sanitization) before sending the fully formed HTML to the bot.

For Users: If the request comes from a normal user, the worker simply passes it through to the origin, preserving the site's fast static delivery and client-side rendering experience.
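
The branching between the two cases can be sketched as a small decision function. Everything named here is an assumption made for illustration: the path list `PRERENDER_PATHS`, the shortened crawler pattern, and the helpers `isPrerenderable` and `chooseRoute` do not come from the actual worker.

```typescript
type Route = "prerender" | "passthrough";

// Hypothetical list of paths the worker is willing to pre-render (assumption).
const PRERENDER_PATHS: string[] = ["/", "/blog", "/projects"];

// Shortened crawler pattern, for illustration only (assumption).
const CRAWLER_PATTERN = /googlebot|bingbot|twitterbot|gptbot/i;

// A path qualifies if it equals a listed path or sits beneath one,
// so "/blog/post-1" matches "/blog" but "/blogging" does not.
function isPrerenderable(pathname: string): boolean {
  return PRERENDER_PATHS.some(
    (base) =>
      pathname === base || (base !== "/" && pathname.startsWith(base + "/"))
  );
}

// Bots on pre-renderable paths get rendered HTML; everything else
// goes straight to the origin unchanged.
function chooseRoute(userAgent: string | null, pathname: string): Route {
  const crawler = userAgent !== null && CRAWLER_PATTERN.test(userAgent);
  return crawler && isPrerenderable(pathname) ? "prerender" : "passthrough";
}
```

Inside a Worker's `fetch` handler, a `"passthrough"` result would usually mean returning `fetch(request)` untouched. For the `"prerender"` branch, Cloudflare's `HTMLRewriter` is one plausible way to inject data at CSS selectors, though this write-up does not say which mechanism the worker uses.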

Performance & Reliability: The worker caches bot-rendered pages, enforces performance budgets so crawlers receive pages quickly, and handles errors so that service stays consistent. It also runs a scheduled task that pre-warms the cache for critical pages, so they are ready before a bot requests them.
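
The caching and pre-warming idea can be illustrated with a small in-memory sketch. This is not the worker's implementation: `BotPageCache`, `prewarm`, the TTL value, and the injected `render` callback are all hypothetical, and timestamps are passed in explicitly to keep the example deterministic.

```typescript
interface CacheEntry {
  html: string;
  expiresAt: number; // epoch milliseconds
}

// Minimal TTL cache for rendered pages, keyed by path (illustrative only).
class BotPageCache {
  private entries = new Map<string, CacheEntry>();
  private ttlMs: number;

  constructor(ttlMs: number) {
    this.ttlMs = ttlMs;
  }

  // Returns cached HTML, or undefined when missing or expired.
  get(path: string, now: number): string | undefined {
    const entry = this.entries.get(path);
    if (!entry || entry.expiresAt <= now) return undefined;
    return entry.html;
  }

  set(path: string, html: string, now: number): void {
    this.entries.set(path, { html, expiresAt: now + this.ttlMs });
  }
}

// Renders and stores every critical path that is not already fresh.
// Returns the paths that were actually rendered on this run.
function prewarm(
  cache: BotPageCache,
  criticalPaths: string[],
  render: (path: string) => string,
  now: number
): string[] {
  const warmed: string[] = [];
  for (const path of criticalPaths) {
    if (cache.get(path, now) === undefined) {
      cache.set(path, render(path), now);
      warmed.push(path);
    }
  }
  return warmed;
}
```

On Cloudflare, the storage would more plausibly be the Workers Cache API than an in-memory map, and `prewarm` would be called from a `scheduled` handler driven by a Cron Trigger. Both mappings are assumptions here.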

Result

After I deployed this custom Cloudflare Worker, my site became fully indexed on Google and Bing, with all dynamic content visible to search engines. This was achieved without rebuilding the site and without any new backend or server hosting costs. The solution improved bot crawlability and SEO performance through cost-effective rendering at the edge, validating the principle: "When something doesn't work the way it should, find a way it could." This project demonstrates my ability to solve complex architectural problems, make effective use of modern tools (including AI), and independently debug technical challenges, even without a traditional CS background.

Project Visuals

Shain Studio
